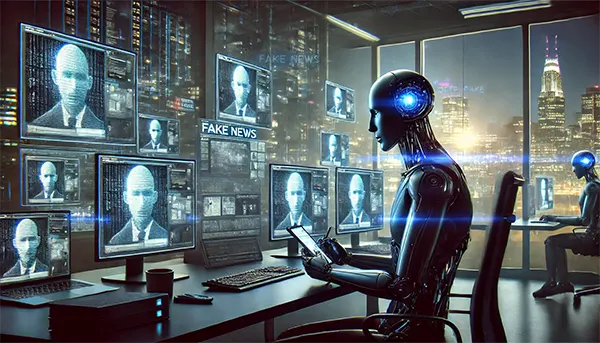

Artificial Intelligence in Black Marketing: How Bots Create and Spread Disinformation in 2025

Artificial intelligence (AI) has revolutionised many industries, but in 2025 it has also become a powerful tool for black marketing. Automated systems, particularly AI-driven bots, are being used to create and spread disinformation that sways public opinion, moves markets, and even shapes political landscapes. This article examines the methods used, the scale of the problem, and possible countermeasures.

The Rise of AI-Generated Disinformation

AI-powered disinformation campaigns are no longer the work of lone hackers or small groups; they are now orchestrated by sophisticated networks. These networks use machine learning models to generate misleading content, spread false narratives, and manipulate public perception on a massive scale.

One of the most concerning developments is the use of generative AI to create hyper-realistic deepfakes: synthetic videos and images that convincingly impersonate real people, showing them saying or doing things they never said or did. The implications for politics, finance, and personal reputations are serious.

Social media platforms are primary targets for AI-driven disinformation campaigns. Advanced bots equipped with natural language processing (NLP) interact with users fluently, generating and amplifying content that appears credible. This makes it increasingly difficult to distinguish real information from fake.

How AI Bots Operate

AI bots operate through sophisticated automation, combining deep learning with social engineering techniques. They do not simply post generic messages; they analyse trends, detect viral topics, and tailor their disinformation so that it blends into ongoing discussions.
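On the defensive side, because these bots usually run as networks pushing the same narratives, one common detection signal is coordination rather than the content of any single post: several accounts publishing near-identical text within minutes of each other. The sketch below is a minimal illustration of that idea, assuming posts are available as simple records; the names `Post`, `shingles`, `jaccard`, and `coordinated_pairs`, and the thresholds, are hypothetical choices for illustration, not any platform's actual method. It compares word 3-gram sets with Jaccard similarity.

```python
import re
from dataclasses import dataclass
from itertools import combinations


@dataclass
class Post:
    account: str
    text: str
    timestamp: float  # seconds since some common reference point


def shingles(text: str, n: int = 3) -> set:
    """Lower-case the text, drop punctuation, and return its set of word n-grams."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < n:
        # Very short posts: fall back to the whole word sequence as one shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity |A & B| / |A | B|; defined as 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def coordinated_pairs(posts, min_similarity=0.6, max_gap_seconds=300.0):
    """Return sorted account pairs that posted near-identical text within a short time gap."""
    prepared = [(p, shingles(p.text)) for p in posts]
    flagged = set()
    # Pairwise comparison is O(n^2): fine for a sketch, too slow for a real platform feed.
    for (p1, s1), (p2, s2) in combinations(prepared, 2):
        if p1.account == p2.account:
            continue
        if abs(p1.timestamp - p2.timestamp) > max_gap_seconds:
            continue
        if jaccard(s1, s2) >= min_similarity:
            flagged.add(tuple(sorted((p1.account, p2.account))))
    return flagged


posts = [
    Post("acct_a", "Company X is hiding massive losses, sell now", 0.0),
    Post("acct_b", "company x is hiding massive losses... SELL NOW!", 60.0),
    Post("acct_c", "Lovely weather in London today", 90.0),
    Post("acct_d", "Company X is hiding massive losses, sell now", 10_000.0),
]
print(coordinated_pairs(posts))  # {('acct_a', 'acct_b')}
```

Note that `acct_d` is not flagged because its identical post falls outside the time window. Tailored, AI-rewritten posts are designed to defeat exactly this kind of surface comparison, so it works only as one signal among several; large-scale systems typically replace the pairwise loop with approximate methods such as MinHash with locality-sensitive hashing.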

Some bots use reinforcement learning to optimise their engagement strategies: they test different messages, measure the reactions, and refine their approach, so campaigns become more effective over time. This adaptability helps misleading content stay persuasive even as audiences and platforms respond.

AI-powered bots also infiltrate closed online communities. By gaining trust within groups, they manipulate discussions, reinforcing disinformation narratives and making them appear more legitimate among targeted audiences.

The Role of AI in Market Manipulation

Financial markets are particularly vulnerable to AI-driven disinformation. False reports can move stock prices, and cryptocurrencies remain a prime target for manipulation. AI-generated fake news can trigger sharp market swings.

Automated trading algorithms, which react to news sentiment, can be misled by AI-created false information. This can lead to artificial price inflation or sudden crashes, affecting investors and businesses alike.
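One mitigation a sentiment-driven strategy can apply is a corroboration gate: act on a headline only when several independent sources agree on its direction within a short window, so a flood of fabricated stories from one outlet cannot trigger a trade on its own. The sketch below assumes sentiment scores in [-1, 1] are already produced by some upstream model; `Headline`, `corroborated_sentiment`, and all thresholds are hypothetical illustrations, not a description of any real trading system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Headline:
    ticker: str
    source_domain: str
    sentiment: float  # assumed to come from an upstream model, scaled to [-1.0, 1.0]
    timestamp: float  # seconds


def corroborated_sentiment(
    headlines,
    ticker: str,
    now: float,
    window_seconds: float = 900.0,
    min_sources: int = 3,
    min_abs_sentiment: float = 0.5,
) -> Optional[float]:
    """Return a sentiment signal for `ticker` only if enough independent domains agree on its direction."""
    # 1. Keep recent, strongly worded headlines about this ticker.
    recent = [
        h for h in headlines
        if h.ticker == ticker
        and 0.0 <= now - h.timestamp <= window_seconds
        and abs(h.sentiment) >= min_abs_sentiment
    ]
    # 2. Collapse to one averaged score per domain, so one site repeating a story counts once.
    by_domain = {}
    for h in recent:
        by_domain.setdefault(h.source_domain, []).append(h.sentiment)
    scores = [sum(v) / len(v) for v in by_domain.values()]
    # 3. Require at least `min_sources` domains pointing the same way; otherwise stay neutral.
    positive = [s for s in scores if s > 0]
    negative = [s for s in scores if s < 0]
    agreeing = positive if len(positive) >= len(negative) else negative
    if len(agreeing) < min_sources:
        return None
    return sum(agreeing) / len(agreeing)


now = 1_000.0
flood = [Headline("XYZ", "breaking-news.example", -0.9, now - i) for i in range(50)]
print(corroborated_sentiment(flood, "XYZ", now))  # None: 50 headlines, but only one domain
```

The gate treats distinct domains as independent, which is itself an assumption: a well-resourced network can register many lookalike domains, so in practice the list of trusted sources matters as much as the threshold.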

AI bots also engage in reputation attacks against companies. Negative reviews, fabricated scandals, and falsified user complaints can damage brand credibility, forcing businesses to invest heavily in counteracting such tactics.
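Fake-review attacks tend to arrive in bursts, so a simple first-pass check is to compare each day's count of, say, one-star reviews against the preceding weeks. The sketch below is a minimal illustration under that assumption; `review_bursts`, the 14-day baseline, and the `k` and `min_count` thresholds are hypothetical, and a flagged day is a prompt for human review, not proof of fraud (a genuine product failure also produces a spike).

```python
from statistics import mean, pstdev


def review_bursts(daily_counts, baseline_days=14, k=3.0, min_count=10):
    """Return the indices of days whose review count is anomalously high versus the preceding window."""
    flagged = []
    for day in range(baseline_days, len(daily_counts)):
        window = daily_counts[day - baseline_days:day]
        # Floor the spread at 1 review so a perfectly flat history does not flag tiny bumps.
        threshold = mean(window) + k * max(pstdev(window), 1.0)
        if daily_counts[day] >= min_count and daily_counts[day] > threshold:
            flagged.append(day)
    return flagged


# Two weeks of ordinary one-star reviews, then a sudden spike on day 14.
one_star_per_day = [2, 3, 2, 4, 3, 2, 3, 2, 3, 4, 2, 3, 2, 3, 40, 3]
print(review_bursts(one_star_per_day))  # [14]
```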

Combating AI-Generated Disinformation

Governments and tech companies are developing advanced detection mechanisms to combat AI-generated disinformation. Machine learning models are being trained to identify synthetic media, helping to flag misleading content.

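Blockchain technology is emerging as a potential solution for content authentication. By recording when and by whom a piece of digital content was first registered, a blockchain ledger lets anyone check later copies against that record, making it harder to pass off altered or fabricated material as authentic. It cannot, however, establish that the original content is true.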
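The core mechanism behind ledger-based authentication is a hash chain: each record stores a cryptographic hash of the content plus the hash of the previous record, so silently editing an old record breaks every link after it. The sketch below shows only that idea on a single machine; `ProvenanceLedger` and its methods are hypothetical names, and real provenance schemes add digital signatures and replicate the ledger across independent parties, both of which are omitted here.

```python
import hashlib
import json
from typing import Optional

GENESIS = "0" * 64
FIELDS = ("content_hash", "publisher", "timestamp", "prev_hash")


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def entry_digest(body: dict) -> str:
    """Hash the entry's fields in a canonical (sorted-key) JSON form."""
    return sha256_hex(json.dumps({k: body[k] for k in FIELDS}, sort_keys=True).encode("utf-8"))


class ProvenanceLedger:
    """Append-only hash chain: each entry commits to a content hash and to the entry before it."""

    def __init__(self) -> None:
        self.entries: list = []

    def register(self, content: bytes, publisher: str, timestamp: float) -> dict:
        body = {
            "content_hash": sha256_hex(content),
            "publisher": publisher,
            "timestamp": timestamp,
            "prev_hash": self.entries[-1]["entry_hash"] if self.entries else GENESIS,
        }
        entry = {**body, "entry_hash": entry_digest(body)}
        self.entries.append(entry)
        return entry

    def lookup(self, content: bytes) -> Optional[dict]:
        """Return the first entry whose content hash matches these exact bytes, if any."""
        digest = sha256_hex(content)
        return next((e for e in self.entries if e["content_hash"] == digest), None)

    def chain_is_intact(self) -> bool:
        """Recompute every link; any edit to an earlier entry breaks the chain from that point on."""
        prev = GENESIS
        for e in self.entries:
            if e["prev_hash"] != prev or entry_digest(e) != e["entry_hash"]:
                return False
            prev = e["entry_hash"]
        return True


ledger = ProvenanceLedger()
statement = b"Official statement: Q3 results will be published on Friday."
ledger.register(statement, "newsroom.example", timestamp=1_700_000_000.0)
print(ledger.lookup(statement)["publisher"])            # newsroom.example
print(ledger.lookup(b"Edited statement: ...") is None)  # True
ledger.entries[0]["publisher"] = "attacker.example"     # tamper with the record
print(ledger.chain_is_intact())                         # False
```

Because the check is on exact bytes, a match proves only that the content is unchanged since registration; any re-encoding or cropping of an image also changes the hash, which is one reason practical systems attach signed metadata to the media itself.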

Public awareness and digital literacy campaigns are crucial in the fight against disinformation. Educating users about the presence of AI-driven bots and their tactics can reduce susceptibility to manipulated content.

Future Challenges and Ethical Concerns

As AI technology advances, so do the tactics used in black marketing. The arms race between AI-driven disinformation and detection tools is intensifying, making it crucial for regulatory frameworks to evolve accordingly.

AI-driven content moderation also raises ethical concerns. While automated systems help detect disinformation, they also risk suppressing legitimate speech if misapplied. Striking the right balance is a significant challenge.

International cooperation is necessary to address the global nature of AI-driven disinformation. Governments and cybersecurity organisations must work together to create policies that mitigate the risks while preserving digital freedoms.

The Future of AI and Information Integrity

The future of AI in black marketing will depend on how effectively society can regulate and counteract its misuse. While AI presents risks, it also offers solutions for identifying and neutralising disinformation.

Developing transparent AI models with explainable decision-making processes can help distinguish genuine content from manipulated narratives. Increased investment in AI ethics will be crucial in shaping the digital landscape.

Ultimately, ensuring information integrity in the digital age requires a combination of advanced technology, regulatory oversight, and public vigilance. By staying informed and adopting robust countermeasures, society can mitigate the impact of AI-driven disinformation.

Similar news

- Clone Landing Pages and Domain Spoofing: How Br...
  Clone landing pages and lookalike domains remain one of the …
- “Negative PR as a service”: what commercial sme...
  The phrase “negative PR as a service” is used for …
- How Fake Review Networks Operate in 2026: Real ...
  Fake review networks have moved far beyond “a few paid …